Introduction

In the ever-evolving landscape of cloud computing, security remains a paramount concern. As organizations increasingly migrate sensitive workloads to the cloud, the need for robust isolation mechanisms has given rise to innovative technologies. One such breakthrough is PodVM, a cornerstone of confidential computing in containerized environments. PodVM, short for Pod Virtual Machine, represents a paradigm shift in how Kubernetes pods are deployed and secured. By encapsulating pods within dedicated virtual machines, PodVM ensures hardware-enforced isolation, protecting against threats from untrusted infrastructure, malicious administrators, or compromised software.

The Foundations: Confidential Computing and Container Security

To appreciate PodVM, we must first place it within the broader context of confidential computing and container orchestration. Confidential computing is a security paradigm that protects data in use, complementing encryption at rest and in transit. It relies on hardware-based trusted execution environments (TEEs), such as Intel’s Software Guard Extensions (SGX) and Trust Domain Extensions (TDX), AMD’s Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP), and IBM’s Secure Execution, to create isolated enclaves or confidential VMs where code and data are shielded from external access, even by the host operating system.

Containers, popularized by Docker and orchestrated by Kubernetes, have revolutionized application deployment with their lightweight, portable nature. However, traditional containers share the host kernel, making them vulnerable to kernel exploits or side-channel attacks. This shared-kernel model poses risks for sensitive workloads like financial transactions, healthcare data processing, or AI model training, where data confidentiality is critical.

Enter Kata Containers, an open-source project that runs containers in lightweight virtual machines, providing VM-level isolation without sacrificing container-like agility. Kata integrates with Kubernetes via the Container Runtime Interface (CRI), allowing pods to run in isolated VMs. But when worker nodes are themselves virtual machines, as is common in the cloud, Kata requires nested virtualization, which can introduce performance overhead and isn’t always supported in cloud environments.

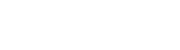

Confidential Containers, a Cloud Native Computing Foundation (CNCF) sandbox project, builds on Kata to extend confidential computing to Kubernetes pods. It encapsulates entire pods in confidential VMs, enabling workloads to run securely on untrusted hosts with minimal changes to existing applications. This project collaborates across vendors to standardize confidential computing at the pod level, supporting use cases in confidential AI, regulated industries, and secure supply chains.

Defining PodVM: The Core Concept

At the heart of Confidential Containers lies PodVM – a confidential virtual machine specifically designed to host one or more containers within a Kubernetes pod. Unlike traditional VMs that might run full operating systems, PodVM is optimized for efficiency, booting quickly and consuming resources tailored to the pod’s needs.

PodVM operates as an isolated execution environment, leveraging hardware TEEs to ensure that the pod’s runtime, data, and code are protected from prying eyes. For instance, in Intel TDX-based setups, PodVM uses memory encryption and access controls to prevent unauthorized access, even from privileged users on the host.

The term “PodVM” is used interchangeably for the VM instance itself and for the image used to instantiate it. Building a PodVM image involves customizing a base OS (like Ubuntu or RHEL) with Kata runtime components, security agents, and cloud-specific configurations. These images can be modified from marketplace offerings or built from scratch using tools like Packer or Docker.

In practice, when a user deploys a pod annotated for confidential execution, the system spins up a PodVM instead of running containers directly on the worker node. This VM then executes the pod’s containers using Kata, ensuring seamless integration with Kubernetes scheduling and networking.
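As a minimal sketch of what such a request can look like, the snippet below builds a pod manifest as a plain Python dictionary. It assumes the cluster selects the PodVM path through a Kubernetes RuntimeClass; the class name `kata-remote`, the pod name, and the image reference are assumptions for illustration, so check which runtime classes your cluster actually installs.

```python
import json

# Assumption: the cluster exposes a RuntimeClass for peer pods.
# "kata-remote" is illustrative; your installation may use another name.
PEER_POD_RUNTIME_CLASS = "kata-remote"


def build_confidential_pod(name: str, image: str) -> dict:
    """Return a pod manifest that requests the PodVM-backed runtime class."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # This field is what routes the pod away from the default runtime.
            "runtimeClassName": PEER_POD_RUNTIME_CLASS,
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference, used only to produce printable output.
    manifest = build_confidential_pod("demo", "registry.example.com/demo:latest")
    print(json.dumps(manifest, indent=2))
```

The rest of the manifest is an ordinary pod spec, which is the point: the application itself does not change, only the runtime it is scheduled onto.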

The Peer Pods Approach: A Game-Changer for Cloud Deployments

One of the most innovative aspects of PodVM is the “peer pods” model, which decouples pod execution from worker nodes. In conventional Kata setups, VMs are nested within the worker node’s hypervisor, requiring nested virtualization support and potentially degrading performance due to double virtualization layers.

Peer pods address this by creating PodVMs as “peers” to the worker nodes, directly via the cloud provider’s APIs. This approach treats PodVMs as independent compute instances, running at the same level as worker VMs in the cloud infrastructure. For example, on Azure or GCP, PodVMs are provisioned as separate VMs, communicating back to the Kubernetes control plane through secure channels.

How does it work? The Cloud API Adaptor (CAA), a key component, acts as a bridge between Kubernetes and cloud providers. When a pod is scheduled, the CAA intercepts the request and uses cloud APIs to launch a PodVM. The PodVM boots a minimal OS from its image, starts the Kata components included in that image, and pulls the pod’s container images. Networking is handled via virtual networks, and storage through persistent volumes adapted for remote access.
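The bridging role described above can be sketched with a small in-memory model. This is not the Cloud API Adaptor's actual code or API: `CloudProvider`, `FakeCloud`, `Adaptor`, and every method name are hypothetical stand-ins, chosen only to show a pod being mapped to a peer VM that is created and deleted through a provider interface.

```python
from dataclasses import dataclass, field
from typing import Protocol


class CloudProvider(Protocol):
    """Hypothetical interface standing in for a cloud provider's VM API."""

    def create_instance(self, image_id: str, name: str) -> str: ...
    def delete_instance(self, instance_id: str) -> None: ...


@dataclass
class FakeCloud:
    """In-memory provider so the sketch runs without cloud credentials."""

    instances: dict = field(default_factory=dict)
    _counter: int = 0

    def create_instance(self, image_id: str, name: str) -> str:
        # A monotonically increasing counter keeps IDs unique after deletes.
        self._counter += 1
        instance_id = f"vm-{self._counter}"
        self.instances[instance_id] = {"image": image_id, "name": name}
        return instance_id

    def delete_instance(self, instance_id: str) -> None:
        self.instances.pop(instance_id, None)


@dataclass
class Adaptor:
    """Tracks which peer VM backs which pod (illustrative bookkeeping only)."""

    cloud: CloudProvider
    podvm_image: str
    pod_to_vm: dict = field(default_factory=dict)

    def start_pod(self, pod_name: str) -> str:
        # Launch a peer VM from the PodVM image instead of a local sandbox.
        vm_id = self.cloud.create_instance(self.podvm_image, f"podvm-{pod_name}")
        self.pod_to_vm[pod_name] = vm_id
        return vm_id

    def stop_pod(self, pod_name: str) -> None:
        # Tear down the peer VM when the pod is removed.
        vm_id = self.pod_to_vm.pop(pod_name)
        self.cloud.delete_instance(vm_id)


if __name__ == "__main__":
    cloud = FakeCloud()
    adaptor = Adaptor(cloud=cloud, podvm_image="podvm-image")
    vm_id = adaptor.start_pod("demo")
    print(vm_id, cloud.instances[vm_id])
    adaptor.stop_pod("demo")
    print(cloud.instances)
```

Keeping the provider behind an interface is what would let one adaptor target several clouds: only the `CloudProvider` implementation changes, while the pod-to-VM bookkeeping stays the same.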

This model eliminates the need for bare-metal workers or nested virtualization, making it ideal for multi-tenant clouds. It also supports persistent volumes by mounting them directly into the PodVM, ensuring data integrity across restarts.

Architecture and Key Components

The architecture of PodVM-centric systems is modular, integrating several open-source components for security and orchestration.

Central to this is the Cloud API Adaptor, which enables peer pod creation across clouds like Azure, AWS, GCP, and IBM Cloud. CAA runs as a DaemonSet on worker nodes, handling VM provisioning, lifecycle management, and integration with Kubernetes.

Another crucial element is Trustee, an attestation and key management service. Trustee verifies the PodVM’s integrity using hardware attestation reports, ensuring the VM hasn’t been tampered with. It supports multiple TEEs and can provision secrets post-verification.
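The verify-then-release pattern can be illustrated with a simplified sketch. It is not Trustee's API: the `Evidence` and `Verifier` names, the measurement format, and the secret store are all hypothetical. Real hardware reports are also cryptographically signed, and checking that signature chain is deliberately left out here.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """Simplified stand-in for a hardware attestation report."""

    tee: str          # e.g. "tdx" or "snp"
    measurement: str  # hex digest of the launched image (hypothetical format)


class Verifier:
    """Releases a secret only if the measurement matches a reference value."""

    def __init__(self, reference_values: dict, secrets: dict):
        self._reference_values = reference_values  # tee -> expected measurement
        self._secrets = secrets                    # secret id -> secret bytes

    def release_secret(self, evidence: Evidence, secret_id: str) -> bytes:
        expected = self._reference_values.get(evidence.tee)
        # Constant-time comparison avoids leaking how many characters matched.
        if expected is None or not hmac.compare_digest(expected, evidence.measurement):
            raise PermissionError("attestation failed: measurement mismatch")
        return self._secrets[secret_id]


if __name__ == "__main__":
    # Hypothetical reference measurement for a known-good PodVM image.
    good = hashlib.sha256(b"podvm-image-v1").hexdigest()
    verifier = Verifier({"tdx": good}, {"db-key": b"example-secret"})

    print(verifier.release_secret(Evidence("tdx", good), "db-key"))
    try:
        verifier.release_secret(Evidence("tdx", "0" * 64), "db-key")
    except PermissionError as err:
        print(err)
```

The design point is the ordering: the workload never holds the secret until the verifier has accepted its evidence, so a tampered image fails before any key material leaves the service.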

Kata Containers provide the runtime within the PodVM, emulating OCI-compatible container execution in a VM sandbox. Additional components like CSI wrappers handle storage, while webhooks enforce policies for confidential deployments.

Diagrams often depict the flow: A pod creation request triggers CAA to launch a PodVM, which then registers with the cluster. Attestation occurs via Trustee, and upon success, the pod runs securely.

Benefits and Real-World Use Cases

PodVM offers compelling advantages. Foremost is enhanced security: by isolating pods in TEE-protected VMs, it mitigates risks from host compromises, making it suitable for regulated sectors like finance and healthcare. Performance-wise, peer pods avoid nested virtualization overhead, with reported boot times as low as 17 seconds for multiple instances.

Use cases abound. In confidential AI, PodVM protects models during inference on shared clouds. For secure supply chains, it enables building artifacts in attested environments. OpenShift sandboxed containers, built on PodVM, allow enterprises to run untrusted code safely.

Implementation Across Platforms

PodVM is supported on major clouds. Azure offers it via Azure Red Hat OpenShift, using AMD SEV-SNP or Intel TDX. GCP integrates with GKE for simple setups. On-premises, it works with vSphere or bare metal using Intel/AMD hardware.

Future Outlook

As confidential computing matures, PodVM will evolve with advancements like ARM CCA support and broader cloud integrations. Challenges like boot time optimization and component caching are being addressed. Expect wider adoption as regulations demand stronger data protections.

Conclusion

PodVM stands as a pivotal innovation, bridging containers and confidential computing for secure, scalable deployments. By embracing peer pods, organizations can confidently run sensitive workloads in the cloud, fostering trust in shared infrastructures. As the ecosystem grows, PodVM promises to redefine cloud security standards.